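With the arrival of 5G and the continued evolution of internet and communication technology, Kubernetes, championed by Google, has become the industry's de facto standard for orchestrating containers. Kubernetes manages containers, but what exactly is a container? What is it at its core? Why is it said to be lighter than a virtual machine? Why are containers said to carry security risks at the lowest level, and where do those risks come from? Why does Linux itself have no concept of a container at all? Understanding these questions matters for anyone who will operate or develop on compute clusters. The answers start with creating a child process.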

Creating an isolated process

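On Linux, the fork system call creates a child process and takes no arguments, whereas the clone system call does take arguments, among them a set of flags used to isolate the new process. In the C code for this example, the parent process calls clone to create the child and then blocks in wait. The first argument to clone, the function pointer container, is the child's entry point. The call passes five flags that isolate the newly created process.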

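| Flag | Effect |
|---|---|
| CLONE_NEWNET | The child gets its own network namespace. |
| CLONE_NEWPID | The child becomes PID 1 in its own PID namespace and has an independent process tree. |
| CLONE_NEWIPC | The child gets its own inter-process communication (IPC) namespace. |
| CLONE_NEWUTS | The child gets its own hostname. |
| CLONE_NEWNS | The child gets its own mount namespace, that is, an independent view of the filesystem layout. |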
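The call described above can be sketched roughly as follows. This is a minimal illustration rather than the original listing: the entry point name container and the hostname container come from the text, while `ISOLATION_FLAGS`, `STACK_SIZE`, `run_isolated` and the choice of `/bin/sh` as the program to start are assumptions made for the example.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <sched.h>
#include <signal.h>
#include <stdlib.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define STACK_SIZE (1024 * 1024) /* assumed size for the child's stack */

/* The five namespace flags listed in the table (name is hypothetical). */
const int ISOLATION_FLAGS =
    CLONE_NEWNET | CLONE_NEWPID | CLONE_NEWIPC | CLONE_NEWUTS | CLONE_NEWNS;

/* Child entry point: set the hostname, then replace itself with a shell.
 * Starting /bin/sh is an assumption; any program could be exec'ed here. */
int container(void *arg)
{
    const char *hostname = arg;
    if (sethostname(hostname, strlen(hostname)) != 0)
        return 1;
    execl("/bin/sh", "sh", (char *)NULL);
    return 1; /* reached only if execl fails */
}

/* Creates the isolated child and blocks until it exits.
 * Returns the wait status, or -1 with errno set on failure. */
int run_isolated(const char *hostname)
{
    assert(hostname != NULL);

    char *stack = malloc(STACK_SIZE);
    if (stack == NULL)
        return -1;

    /* The stack grows downward on common architectures, so pass its top.
     * SIGCHLD makes the child waitable like a forked process. */
    pid_t pid = clone(container, stack + STACK_SIZE,
                      ISOLATION_FLAGS | SIGCHLD, (void *)hostname);
    int saved_errno = errno;

    /* Without CLONE_VM the child runs on its own copy of this memory,
     * so the parent's copy can be released right away. */
    free(stack);
    if (pid == -1) {
        errno = saved_errno;
        return -1;
    }

    int status;
    if (waitpid(pid, &status, 0) == -1)
        return -1;
    return status;
}
```

Calling `run_isolated("container")` from `main` needs root privileges (CAP_SYS_ADMIN); without them clone is expected to fail with EPERM.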


Before creating the child process, inspect the system's process tree, network devices, hostname, and IPC objects.

    root@vbox:/share# pstree

Compile and run the program to create the child process. Note how the command prompt changes.

    root@vbox:/share# ./main

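After the child process is created, inspect the process tree, network devices, hostname, and IPC objects again. The process now believes it is PID 1, the only network device is a loopback interface that has not been brought up, the hostname has become container, and the shared memory segments that were listed before are gone. At this point the shell is effectively running inside a container.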

    container:/share# pstree
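The same check can also be made from inside a program. The helper below is a small sketch, not part of the original example; the name `describe_self` and its output format are made up for illustration.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stdio.h>
#include <unistd.h>

/* Writes "pid=<pid> host=<hostname>" for the calling process into buf.
 * Returns 0 on success, -1 on error or if buf is too small. */
int describe_self(char *buf, size_t len)
{
    char host[256];
    assert(buf != NULL);

    if (gethostname(host, sizeof host) != 0)
        return -1;
    host[sizeof host - 1] = '\0'; /* may be unterminated if truncated */

    int n = snprintf(buf, len, "pid=%ld host=%s", (long)getpid(), host);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

Called from the isolated child, this should report PID 1 and the hostname container, matching what the shell shows; called on the host, it reports the ordinary PID and hostname.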

Building an independent network for the process

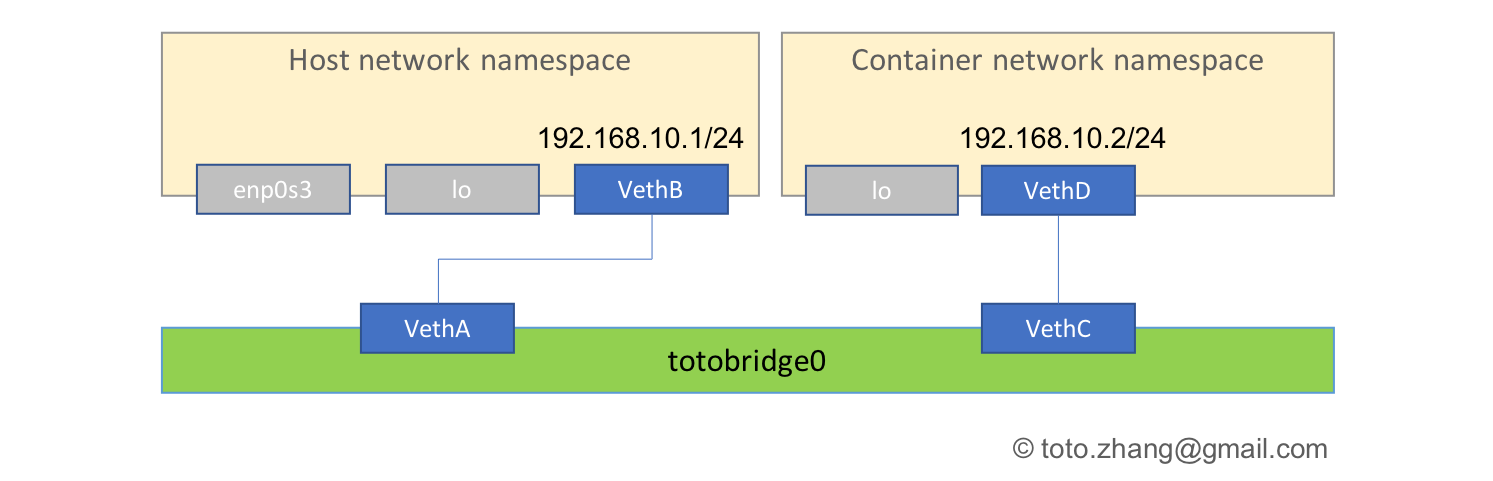

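Next, give the child process a network connection similar to Docker's. Docker typically creates a bridge named bridge0 or docker0 that connects multiple "containers" to each other and to the "host", which is how it gives each container its own network space. A NAT network can be built in much the same way.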

    # Run in the parent process's namespace

Once the commands above have run, the network topology shown in the figure above is in place. The two network namespaces now look like this:

    # Network devices on the host
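For comparing the two sides from code rather than from the shell, a process can list the interfaces visible in its own network namespace. The sketch below uses getifaddrs and is only an illustration; the function name `print_interfaces` is hypothetical, and filtering on AF_PACKET entries is a Linux-specific way to get one line per interface.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <ifaddrs.h>
#include <net/if.h>
#include <stdio.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Prints every interface in the caller's network namespace with its
 * UP/DOWN state. Returns the number of interfaces, or -1 on error. */
int print_interfaces(FILE *out)
{
    struct ifaddrs *list, *ifa;
    int count = 0;
    assert(out != NULL);

    if (getifaddrs(&list) != 0)
        return -1;

    for (ifa = list; ifa != NULL; ifa = ifa->ifa_next) {
        /* On Linux each interface has exactly one AF_PACKET entry,
         * even when it is down and has no IP address assigned. */
        if (ifa->ifa_addr == NULL || ifa->ifa_addr->sa_family != AF_PACKET)
            continue;
        fprintf(out, "%-12s %s\n", ifa->ifa_name,
                (ifa->ifa_flags & IFF_UP) ? "UP" : "DOWN");
        count++;
    }

    freeifaddrs(list);
    return count;
}
```

Run on the host and again inside the child, `print_interfaces(stdout)` should show different device lists, since each side only sees the devices of its own namespace.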

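As you can see, the container child process now behaves much like a virtual machine. At its core, a container is simply an isolated child process on a Linux system, so containers run on the same kernel as the host, and that makes them weaker than virtual machines in terms of security. A virtual machine, by contrast, uses software to emulate a complete computer, runs an operating system on it, and runs applications on top of that. Applications in different virtual machines do not share a kernel or its network stack, whereas with containers it is easy to control exactly what is shared. This is also the mechanism that lets a Kubernetes pod hold multiple containers.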
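Whether two processes actually share a given namespace can be checked by comparing the namespace files under /proc, which is one way to see the kind of sharing that a pod relies on. The helper below is a sketch under that assumption; `same_namespace` is a hypothetical name, and it needs permission to inspect both processes.

```c
#include <assert.h>
#include <stdio.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Returns 1 if processes a and b are in the same namespace of the given
 * kind ("net", "pid", "ipc", "uts", "mnt"), 0 if not, -1 on error. */
int same_namespace(pid_t a, pid_t b, const char *kind)
{
    char path_a[64], path_b[64];
    struct stat st_a, st_b;
    assert(kind != NULL);

    snprintf(path_a, sizeof path_a, "/proc/%ld/ns/%s", (long)a, kind);
    snprintf(path_b, sizeof path_b, "/proc/%ld/ns/%s", (long)b, kind);

    if (stat(path_a, &st_a) != 0 || stat(path_b, &st_b) != 0)
        return -1;

    /* Two processes in the same namespace resolve to the same
     * device and inode pair. */
    return st_a.st_dev == st_b.st_dev && st_a.st_ino == st_b.st_ino;
}
```

For the child created earlier, comparing it with its parent should give 0 for each of the five kinds, while two containers that were set up to share a network namespace would give 1 for "net".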

Reference: http://crosbymichael.com/creating-containers-part-1.html