TIME_WAIT is the most complicated state in the TCP state machine. At first glance it looks unnecessary, and some optimization techniques simply eliminate it. But it is part of a protocol that has been in use for many years, so there must be a reason for it. At the very least, we should understand it in detail before eliminating it. As Richard Stevens put it in his book, “Instead of trying to avoid the state, we should understand it”. So what is a TCP endpoint in TIME_WAIT waiting for? Why does an endpoint enter this state? What problems does it cause? And is there any way to avoid those problems?

How long does TIME_WAIT state last?

According to the TCP specification, an endpoint that enters TIME_WAIT should stay in this state for 2MSL. This gives the remote endpoint the best chance of receiving the final ACK, because the local endpoint is still around to answer a retransmitted FIN. While an endpoint is in TIME_WAIT, the pair of endpoints that defines the connection cannot be reused.

2MSL is twice the Maximum Segment Lifetime (MSL). Most Linux systems use an MSL of 30 seconds, so 2MSL is 1 minute. The lifetime of an IP packet in the network is bounded by its TTL, but TTL counts hops rather than time, so there is no direct relationship between MSL and TTL. In most versions of the Linux kernel the TIME_WAIT length is hard-coded to 60 seconds, although some other operating systems provide a configuration interface for this value (tcp.h).

//include/net/tcp.h

#define TCP_TIMEWAIT_LEN (60*HZ) /* how long to wait to destroy TIME-WAIT state, about 60 seconds */
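The macro is expressed in kernel timer ticks, where HZ is the number of ticks per second, so the result is 60 seconds whatever HZ the kernel was built with. A minimal user-space sketch of that arithmetic follows. Both function names are hypothetical helpers for illustration, not kernel APIs, and the HZ values in the usage note are only assumed examples.

```c
/* Hypothetical mirror of the kernel macro TCP_TIMEWAIT_LEN (60*HZ),
 * with hz passed in as a parameter instead of a build-time constant. */
unsigned long tcp_timewait_len(unsigned long hz)
{
    return 60UL * hz;
}

/* Hypothetical helper: convert a tick count back to whole seconds. */
unsigned long ticks_to_seconds(unsigned long ticks, unsigned long hz)
{
    return ticks / hz;
}
```

For example, with an assumed HZ of 250 the macro evaluates to 15000 ticks, and with an assumed HZ of 1000 to 60000 ticks. Both convert back to 60 seconds.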

Transition to the TIME_WAIT state

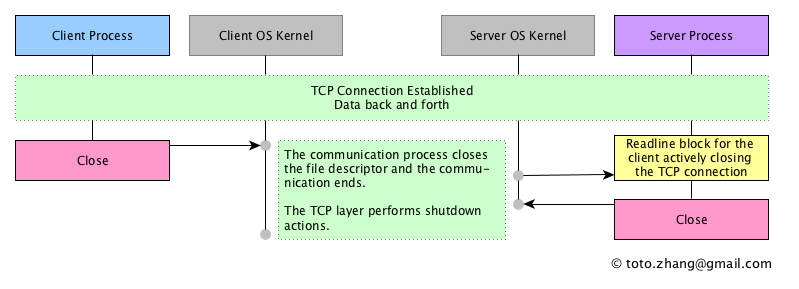

According to the TCP state transition diagram, the endpoint that sends the first FIN enters TIME_WAIT. In other words, whether it is the client or the server, whoever sends the FIN first ends up in TIME_WAIT. On the other hand, the client and the server play very different roles in a communication, so which of them enters TIME_WAIT first leads to different consequences. Here are the details. In cases 1 to 3 the client initiates the FIN. In cases 4 and 5 the server disconnects first.

Client initiates the FIN request first

Case 1 Client initiates the FIN segment

First, I start the server process, then run the client twice. Each run connects to the server and sends one application request, so the client sends two FINs in total. Afterwards I run netstat to observe the connection states.

Printout on server

#Server start, TCP port

toto@guru:~$ netstat -ant | grep 1982

tcp 0 0 0.0.0.0:1982 0.0.0.0:* LISTEN

#Client runs twice, the server side's printout

toto@guru:~$ server

Receive message from client process on 10.16.56.2: request,hostname

Receive message from client process on 10.16.56.2: request,hostname

#After the client has disconnected twice, the TCP status on the server

toto@guru:~$ netstat -ant | grep 1982

tcp 0 0 0.0.0.0:1982 0.0.0.0:* LISTEN

Printout on client

#Run the client twice

toto@ClientOS:~$ client 10.22.5.3 1982

response,guru

toto@ClientOS:~$ client 10.22.5.3 1982

response,guru

#Check the tcp status

toto@ClientOS:~$ netstat -ant | grep 1982

tcp 0 0 10.16.56.2:55725 10.22.5.3:1982 TIME_WAIT

tcp 0 0 10.16.56.2:55726 10.22.5.3:1982 TIME_WAIT

The client process initiates the FIN twice. Notice that the second connection succeeds; the server does not reject it. Because TCP port 55725 on the client is in TIME_WAIT, the client's kernel assigns a new ephemeral port, 55726, to the second connection. Most of the time, when a client opens a TCP connection, the kernel assigns a fresh client-side port to the process. The ephemeral port range is configurable. On Linux it can be checked like this:

toto@guru:~$ cat /proc/sys/net/ipv4/ip_local_port_range

This case indicates that when the client initiates the FIN to close the connection, neither the server nor the client itself is affected. However, if a communication system opens a large number of TCP connections and closes them again within a very short period, the client's ephemeral ports can be exhausted. This situation should be anticipated and avoided, for example with long-lived TCP connections, a connection pool, or closing with RST.
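To get a rough feeling for how quickly the ports can run out, the sketch below parses a line in the format of ip_local_port_range and estimates an upper bound on the connection rate, under the simplifying assumption that every closed connection holds its ephemeral port for the whole TIME_WAIT period. The function names are hypothetical, and the range used in the usage note is an assumed example, not output from the machines in this test.

```c
#include <stdio.h>

/* Parse a line in the format of /proc/sys/net/ipv4/ip_local_port_range
 * ("low<whitespace>high"). Returns 0 on success, -1 on malformed input. */
int parse_port_range(const char *line, int *low, int *high)
{
    if (sscanf(line, "%d %d", low, high) != 2)
        return -1;
    if (*low < 1 || *high > 65535 || *low > *high)
        return -1;
    return 0;
}

/* Upper bound on new connections per second from one client address to one
 * server address and port, assuming each closed connection keeps its
 * ephemeral port busy for time_wait_secs seconds. */
double max_connection_rate(int low, int high, int time_wait_secs)
{
    return (double)(high - low + 1) / time_wait_secs;
}
```

With an assumed range of 32768 to 60999 there are 28232 ports, so a 60 second TIME_WAIT allows roughly 470 new connections per second to a single server address and port before the client runs out.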

Case 2 Client bound to a local port initiates the FIN

We bind the client to a specific local port:

//bind a port to the sockfd
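As a minimal sketch of what binding a client socket to a fixed local port before connect() can look like, assuming IPv4: `bind_local_port` is a hypothetical helper name and is not taken from the client used in these tests.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>

/* Bind sockfd to the given local TCP port on any local IPv4 address.
 * Call it after socket() and before connect().
 * Returns 0 on success, -1 on error with errno set by bind(). */
int bind_local_port(int sockfd, unsigned short port)
{
    struct sockaddr_in local;

    memset(&local, 0, sizeof(local));
    local.sin_family = AF_INET;
    local.sin_addr.s_addr = htonl(INADDR_ANY);
    local.sin_port = htons(port);

    return bind(sockfd, (struct sockaddr *)&local, sizeof(local));
}
```

If the port is unavailable, bind() fails with errno set to EADDRINUSE, whose message text is "Address already in use".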

Modify the code, recompile and run the client twice.

Server printout

#Server

toto@guru:~$ netstat -ant | grep 1982

tcp 0 0 0.0.0.0:1982 0.0.0.0:* LISTEN

toto@guru:~$ server

Receive message from client process on 10.16.56.2: request,hostname

Client printout

#Client

toto@ClientOS:~$ client 55555 10.22.5.3 1982

response,guru

toto@ClientOS:~$ client 55555 10.22.5.3 1982

bind error: Address already in use

toto@ClientOS:~$ netstat -ant | grep 1982

tcp 0 0 10.16.56.2:55555 10.22.5.3:1982 TIME_WAIT

This shows that while a TCP connection is in TIME_WAIT, the same connection cannot be recreated.

Case 3 Client bound to a local port initiates a FIN to one server, then sends a SYN to a different server process

Run two server processes, one on host 10.22.5.3 and one on host 10.16.56.2. On host 10.16.56.2, run the client twice. We expect two TCP connections, {10.16.56.2,55555,10.22.5.3,1982} and {10.16.56.2,55555,10.16.56.2,1982}, and we assume that both will be established successfully. On Ubuntu Linux, however, the second one fails.

Client printout

toto@ClientOS:~$ client 55555 10.22.5.3 1982

response,guru

toto@ClientOS:~$ client 55555 10.16.56.2 1982

bind error: Address already in use

toto@ClientOS:~$ netstat -ant | grep 1982

tcp 0 0 10.16.56.2:55555 10.22.5.3:1982 TIME_WAIT

Obviously, these are two different TCP connections, yet Ubuntu Linux refuses to let port 55555 be used for the second one. This does not seem reasonable. We can only wait for the timeout and then start the second connection. Running the two clients in the opposite order fails in the same way:
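One plausible way to read this result, assuming the client calls bind() before connect(): at bind() time only the local half of the 4-tuple is known, so the kernel cannot yet tell that the second connection would differ from the one in TIME_WAIT. The sketch below only illustrates that comparison. The struct and function names are hypothetical and are not kernel code.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdint.h>

/* A TCP connection is identified by its 4-tuple. */
struct tcp_tuple {
    struct in_addr local_addr;
    uint16_t       local_port;
    struct in_addr remote_addr;
    uint16_t       remote_port;
};

/* Fill a tuple from dotted-quad strings. Returns 0, or -1 on a bad address. */
int tuple_init(struct tcp_tuple *t, const char *laddr, uint16_t lport,
               const char *raddr, uint16_t rport)
{
    if (inet_pton(AF_INET, laddr, &t->local_addr) != 1 ||
        inet_pton(AF_INET, raddr, &t->remote_addr) != 1)
        return -1;
    t->local_port = lport;
    t->remote_port = rport;
    return 0;
}

/* Full 4-tuple comparison: this is what identifies a connection. */
int same_connection(const struct tcp_tuple *a, const struct tcp_tuple *b)
{
    return a->local_addr.s_addr == b->local_addr.s_addr &&
           a->local_port == b->local_port &&
           a->remote_addr.s_addr == b->remote_addr.s_addr &&
           a->remote_port == b->remote_port;
}

/* Local half only: all that is known when bind() is called. */
int same_local_endpoint(const struct tcp_tuple *a, const struct tcp_tuple *b)
{
    return a->local_addr.s_addr == b->local_addr.s_addr &&
           a->local_port == b->local_port;
}
```

For the two tuples in this case, `same_connection` is false but `same_local_endpoint` is true, which matches the observation that the failure is reported as a bind error rather than a connect error.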

Client printout

toto@ClientOS:~$ client 55555 10.16.56.2 1982

response,ClientOS

toto@ClientOS:~$ client 55555 10.22.5.3 1982

bind error: Address already in use

toto@ClientOS:~$ netstat -ant | grep 1982

tcp 0 0 10.16.56.2:55555 10.16.56.2:1982 TIME_WAIT

Server initiates the FIN request first

Case 4 Server initiates the FIN, then restarts

TIME_WAIT causes several kinds of problems for the server, and it affects communication much more than TIME_WAIT on the client side. As communication system engineers, we should give TIME_WAIT on the server a higher priority.

Start the server process and connect to it with two different clients. Both connections are established successfully. Then kill the server.

#Server

Try to restart the server; it fails.

#Server

If the server initiates the FIN, the TCP endpoint on the server side enters TIME_WAIT. If the server is serving a huge number of clients, all of those connections move to TIME_WAIT at that moment.

Case 5 Server initiates the FIN, and a client bound to a specific port sends the SYN twice

Bind the client TCP port to 55555 and connect to the server twice.

#Client

In the captured packets, notice the 8th packet, which Wireshark marks in black as “TCP port numbers reused”. It shows that the TIME_WAIT endpoint on the server side does not reject the connection request from the client. In this situation the TIME_WAIT port can be reused.

Why is TIME_WAIT required?

Why wait for 2MSL? Suppose there were no TIME_WAIT; what would happen?

Scenario 1

Suppose it were allowed to create a second TCP connection with an identical 4-tuple right after the first one. The second connection is an incarnation of the first. If packets from the first connection are delayed in the network and are still alive when the incarnation is created (because the wait was not long enough for the network to discard them), they can be mistaken for packets of the new connection and cause unpredictable errors.

Although this is a low-probability event, it is still possible. The protocol itself already has some preventive measures. First, the ISN chosen during the three-way handshake is one of them. Second, most of the time the kernel assigns the client an ephemeral port, which ensures that a new connection to the same host gets a different 4-tuple.

Scenario 2

Suppose a TCP connection is being closed. The client sends a FIN and receives an ACK, but either the server's FIN or the client's final ACK is lost in the network. What happens next if the client does not wait for 2MSL? The server retransmits its FIN, but the client considers the connection finished and answers with an RST. The server receives the RST and thinks, “Shit, this was not a successful communication”.

Dealing with the problems TIME_WAIT brings

Before optimizing TIME_WAIT away, think it over and consider every relevant detail, so that the change does not introduce new problems.

Strategy 1 Change the TIME_WAIT time setting

Refer to your OS manual. At the time of writing, Ubuntu offers no setting for this.

Strategy 2 Socket parameter SO_REUSEADDR

After calling the socket function, set SO_REUSEADDR on the new socket. This does not remove the TIME_WAIT state itself; it allows the process to bind to a local address and port while an old connection on it is still in TIME_WAIT.

int Socket(int family, int type, int protocol)

{

int sockfd = 0;

int optval = 1;

// Get a socket

if ((sockfd = socket(family, type, protocol)) < 0){

err_sys("socket error");

}

// Allow bind() to succeed while old connections on the port are in TIME_WAIT

if (setsockopt(sockfd, SOL_SOCKET, SO_REUSEADDR, (const void *) &optval, sizeof(optval)) < 0){

close(sockfd);

return -1;

}

return sockfd;

}
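The option only has an effect on bind() if it is set before bind() is called. The following sketch shows that ordering for a listening socket, assuming IPv4 and listening on all local addresses. `listen_reuseaddr` is a hypothetical helper, separate from the Socket wrapper above.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Create a listening IPv4 TCP socket on the given port, with SO_REUSEADDR
 * set before bind(). Returns the socket descriptor, or -1 on error. */
int listen_reuseaddr(unsigned short port)
{
    struct sockaddr_in addr;
    int optval = 1;
    int fd = socket(AF_INET, SOCK_STREAM, 0);

    if (fd < 0)
        return -1;

    /* Must happen before bind() to influence the address check. */
    if (setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &optval, sizeof(optval)) < 0)
        goto fail;

    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_ANY);
    addr.sin_port = htons(port);

    if (bind(fd, (struct sockaddr *)&addr, sizeof(addr)) < 0)
        goto fail;
    if (listen(fd, SOMAXCONN) < 0)
        goto fail;
    return fd;

fail:
    close(fd);   /* do not leak the descriptor on any failure */
    return -1;
}
```

A server started this way would be expected to restart on port 1982 in Case 4 even while its old connections are still in TIME_WAIT, although that was not verified in the tests above.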

Strategy 3 Ensure the client sends the first FIN

Whoever sends the FIN first enters TIME_WAIT, and extra resources stay tied up for the duration of that state. Compared with the clients, the resources on the server are much more expensive and valuable.

Strategy 4 Disconnection with RST

No matter which side sends the RST segment to disconnect, neither side enters TIME_WAIT, and the TCP data structures are released at once. Because an RST discards any data that is still in flight, we need some additional code at the application layer to make sure that the communication was successful and effective.
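A common way to make close() send an RST on BSD-style sockets is the SO_LINGER option with the linger switch on and a zero timeout. The sketch below shows only that setting. `enable_abortive_close` is a hypothetical helper name, and whether this technique suits a given application is an assumption to check.

```c
#include <sys/socket.h>

/* Configure fd so that close() aborts the connection with an RST instead of
 * the normal FIN sequence. Returns 0 on success, -1 on error. */
int enable_abortive_close(int fd)
{
    struct linger lg;

    lg.l_onoff = 1;    /* turn lingering on */
    lg.l_linger = 0;   /* zero timeout: drop unsent data, reset on close() */

    return setsockopt(fd, SOL_SOCKET, SO_LINGER, &lg, sizeof(lg));
}
```

Call it on a connected socket shortly before close(). Any data that has not yet been delivered is discarded, which is why the application-level confirmation mentioned above is needed.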

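Strategy 5 Change the parameters tcp_tw_reuse and tcp_tw_recycle

Refer to RFC 1122 and to the tcp_tw_reuse and tcp_tw_recycle code for more information. Note that tcp_tw_recycle was removed in Linux 4.12, so it exists only on older kernels.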
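On Linux these parameters are exposed as files under /proc/sys/net/ipv4/, so a program can check their current values before relying on them. The sketch below reads one integer from such a file. `read_int_setting` is a hypothetical helper, and it makes no assumption about which values a given kernel accepts.

```c
#include <stdio.h>

/* Read a single integer from a file such as
 * /proc/sys/net/ipv4/tcp_tw_reuse. Returns 0 on success, -1 if the file
 * cannot be opened or does not start with an integer. */
int read_int_setting(const char *path, int *value)
{
    FILE *fp = fopen(path, "r");
    int ok;

    if (fp == NULL)
        return -1;
    ok = (fscanf(fp, "%d", value) == 1);
    fclose(fp);
    return ok ? 0 : -1;
}
```

A return value of -1 for /proc/sys/net/ipv4/tcp_tw_recycle simply means that the running kernel does not provide that parameter.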